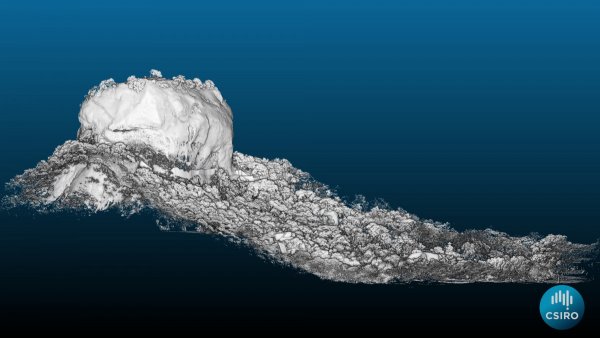

It is probably common knowledge that a person using a smartphone today has more computing power at their disposal than the U.S. Space Programme did during the 1960s. The National Aeronautics and Space Administration (NASA) used five IBM System 360 model 75J computers, each of which cost US$ 3.5 million (about US$ 25 million today), for the Apollo series of Moon missions. The System 360 series, which came out in 1965, is credited with creating a broad market for information technology (IT) products.

A System 360 computer took up a substantial amount of space, a largish office room being required to house its multiple discrete elements, and needed constant cooling. Each model 75 had 8 megabytes (MB) of memory and a data processing speed of 2.54 million instructions per second (MIPS). By way of contrast, the hand-held iPhone 5s smartphone, which came out in 2013, has 1,024 MB of memory and a processing speed of 18,200 MIPS.
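To put the gap in numbers, here is a minimal sketch that divides the iPhone 5s figures by the model 75 figures quoted above. The dictionary keys and the `ratio` helper are names chosen for this illustration only; the figures are the ones given in the text.

```python
# Figures as quoted in the article; the key names are illustrative assumptions.
model_75 = {"memory_mb": 8, "mips": 2.54}
iphone_5s = {"memory_mb": 1024, "mips": 18200}


def ratio(new, old):
    """Return how many times larger `new` is than `old`."""
    return new / old


memory_ratio = ratio(iphone_5s["memory_mb"], model_75["memory_mb"])
speed_ratio = ratio(iphone_5s["mips"], model_75["mips"])

print(f"Memory: {memory_ratio:.0f} times larger")
print(f"Speed: roughly {speed_ratio:,.0f} times faster")
```

On these figures the phone has 128 times the memory and roughly 7,000 times the processing speed of a single model 75.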

IBM System/360 computer in use at Volkswagen’s Wolfsburg works. Image courtesy wikimedia.org

Punched Cards

Possibly even more astonishing than this comparison of memory size and speed is the difference in ease of operation. Today’s smartphone touch-screen display also acts as the input, so there is essentially a single user interface, literally at one’s fingertips. The user interface of a computer 50 years ago, on the other hand, was fairly complex.

British HEC 1202 computer. Image courtesy chilton-computing.org.uk

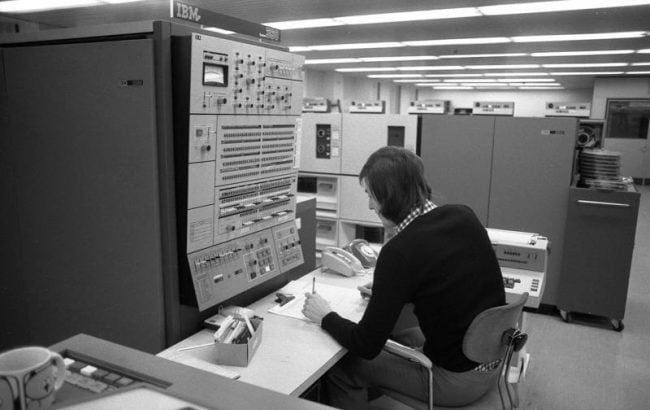

Right up to the 1970s, the principal method of feeding data into a mainframe was the punched card, also known as the IBM card. This was a rectangular card, 187.3 mm long and 82.6 mm wide, with rounded corners except for one, which was cut off diagonally (rather like a SIM card). It was divided into 80 columns and 12 rows, and each position could be punched with a rectangular hole using a special typewriter-like machine called a card punch.
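To show how 80 columns and 12 rows can hold a line of text, here is a minimal sketch of the widely used Hollerith card code for digits and capital letters, with one character per column. The encoding is not described in this article, and it is only a subset: real card punches also handled punctuation, which is left out here. The row labels (12, 11, 0 to 9) follow the usual convention, and the function names are invented for this illustration.

```python
# Row labels from the top edge of the card to the bottom (usual convention).
ROWS = ["12", "11", "0", "1", "2", "3", "4", "5", "6", "7", "8", "9"]
COLUMNS = 80


def punches_for(ch):
    """Return the set of rows punched in one column for a single character."""
    if len(ch) != 1:
        raise ValueError("expected a single character")
    if ch == " ":
        return set()                      # blank column: no holes
    if ch in "0123456789":
        return {ch}                       # digits: one hole in the digit row
    if "A" <= ch <= "I":
        return {"12", str(ord(ch) - ord("A") + 1)}   # zone 12 + digit 1-9
    if "J" <= ch <= "R":
        return {"11", str(ord(ch) - ord("J") + 1)}   # zone 11 + digit 1-9
    if "S" <= ch <= "Z":
        return {"0", str(ord(ch) - ord("S") + 2)}    # zone 0 + digit 2-9
    raise ValueError(f"character {ch!r} is outside this simplified subset")


def encode_card(text):
    """Encode up to 80 characters as a list of 80 columns of punched rows."""
    if len(text) > COLUMNS:
        raise ValueError("a card holds at most 80 characters")
    return [punches_for(ch) for ch in text.upper().ljust(COLUMNS)]


def render(card):
    """Draw the card as 12 lines of text: '#' is a hole, '.' is blank."""
    return ["".join("#" if row in col else "." for col in card) for row in ROWS]


if __name__ == "__main__":
    for line in render(encode_card("HELLO 1965")):
        print(line)
```

Each character occupies a single column, so one card carries at most one 80-character line, which is why a program of any length needed a whole deck.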

The punched card owed its origin to the automated Jacquard looms, used for weaving cloth, of the early 19th century. Holes punched in sequences of cards were used to “program” the loom, each card standing for one row of the weave design. This card was adopted for use in early “mathematical engines” and was taken up by Herman Hollerith, who invented a “tabulating machine” and founded the predecessor of IBM in 1896.

Hollerith’s electro-mechanical tabulating machine, which was used to process the results of the 1890 US census, set the standard for computers for the next half-century. Similar electro-mechanical computing machines were common in the early part of the 20th century. The British Tabulating Machine Company used this technology during the Second World War to build “bombes”, which were used by the armed forces to decipher encrypted Nazi radio traffic. The final IBM electro-mechanical accounting machine was withdrawn from production in 1976. IBM adopted the essential format of the modern punched card in 1928, and set it in its final form in 1964. This card became an icon of information technology in the mid-20th century.

IBM punched card. Image courtesy wikimedia.org

User Interface

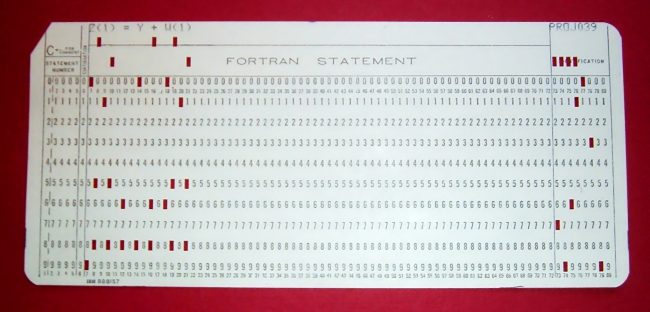

The IBM 360 series used an IBM 29 card punch, which looked like a typewriter, to punch out the holes. An IBM 59 card verifier checked whether each card was punched correctly. The cards were then inserted into a card reader, which fed the data into the central processing unit (CPU). The input interface was completed by the console, part of the CPU: a panel of flashing lights and switches that could be used to adjust programs and, in an emergency, to shut down the computer. The output came in the form of a print-out, a sheet of paper with perforated edges which was fed, from a box full of folded continuous stationery, through an IBM 1403 line printer.

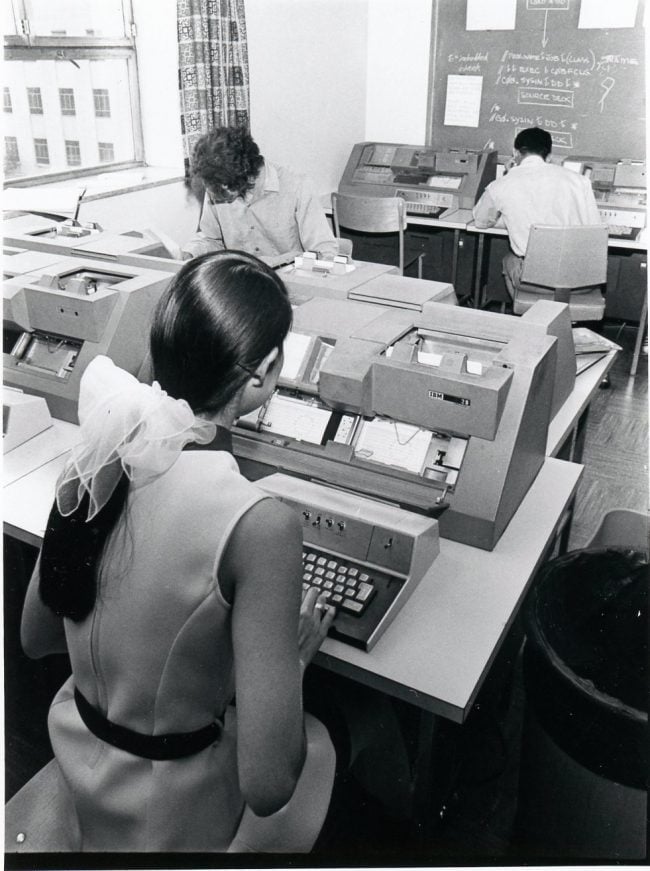

Most other mainframe computers, such as the British ICL 1900 series, widely used in Commonwealth universities, were similar in operation and comprised the same elements, although the hardware would be different. This bulky and complicated array of machines remained the user interface for computing well into the 1980s, punched cards only being phased out of production models in 1984. People would queue up to hand over their hand-written program-sheets to the card punch operator, and wait for the printed output to come back to them. As can well be imagined, an error in programming could take a considerable time to be corrected!

Card punch operator. Image courtesy library.ryerson.ca

Video Interfaces

In some models, for example those supplied to NASA, a cathode-ray tube (CRT) video monitor – or video display unit (VDU) – also provided an output. This would be monochrome, not colour – blue and white or green and white, like the screens in the film The Matrix.

Scientists in the Soviet Union took the first step towards the modern user interface in 1969, when the MIR-2 mini-computer was rolled out. While considered a very small computer at the time, performance-wise it was comparable to the IBM 360 series. Input was via punched tape rather than punched cards, and the output was also a punched tape, which was fed into an electronic typewriter for a printed output. What made it special was the innovative combination of a keyboard with a VDU, and a light pen for correcting documents and drawings. A light pen contains a light sensor which, when placed against the screen, picks up the light from the screen and enables the computer to locate its position. This was the predecessor of the computer stylus and, ultimately, the touchscreen.

Soviet MIR-2 computer with light pen. Image courtesy uacomputing.com

Sri Lanka Enters The Computer Age

In Sri Lanka, electro-mechanical tabulating machines were used well into the 1970s by large organisations, such as the Ceylon Transport Board, for managing salaries, accounts, and stores. While this speeded up business processes, it nevertheless required the storage of vast quantities of data in physical form.

In 1968, the first modern electronic computer, an ICL 1901A, with similar performance to the IBM 360 series, was acquired by the State Engineering Corporation. In 1971, the Department of Mathematics of the Peradeniya Campus of the University of Ceylon (as it was then) obtained an IBM 1130 machine, which was considerably cheaper than the 360 series, had inferior capability, and still relied on punched cards. It had just 32 kB of stored memory and 0.5 MB of memory on magnetic disk. However, it was optimised for mathematical and scientific functions, and was just what the newly-created University Computing Centre required at the time. The Katubedda Campus (now the University of Moratuwa) got a similar IBM 1130 soon after. Later, the Department of Examinations received an IBM 360.

These machines were used in the early 1970s to process General Certificate of Education (GCE) results. Initial difficulties associated with unfamiliarity with the equipment were exacerbated by the cumbersome error-correction process. This led to some spectacular mistakes, which became the stuff of speculation at the time. One rumour had it that a novice Buddhist monk got distinctions at the GCE (Ordinary Level) examination for Needlework and Domestic Science. Teething problems such as these were gradually overcome.

The IBM 1130 system, similar to the one at Peradeniya. Image courtesy ed-thelen.org

The commercial sector began to adopt computers in the 1980s. However, companies proceeded with caution. In one company of which this writer had personal experience, paper records continued to be kept as a back-up, which lessened the impact of computerisation.

It was at this time that Sri Lanka first became an IT-related outsourcing destination, providing its services mainly to London-based outfits. Nevertheless, popularisation of computing in Sri Lanka had to wait for the arrival of second-generation personal computers.

Personal Computers

During the 1970s, the keyboard/VDU combination became standard, and the personal computers which came into the market in the latter part of the decade adopted this configuration. Most of them had a separate monochrome VDU and used external audio cassette players for loading programs and for storage, with external floppy-disk drives available as options. The iconic Apple II came out as a CPU with a keyboard, which had to be connected to a separate colour VDU. The ground-breaking Commodore PET came out as the first personal computer in which all features were built-in.

1977 Commodore PET. Image courtesy wikimedia.org

These computers all used a command-line interface; that is, the commands typed into the computer, and the computer’s responses and prompts, were displayed on the monitor. From the early 1970s, researchers at the Xerox Corporation developed a graphical user interface (GUI), using a mouse. The Royal Navy developed a trackball device during the Second World War, and this was inverted by the German Telefunken company in the late 1960s to create the first mouse. However, the device only became widespread after Xerox introduced it into the market.

In 1984, the Apple Macintosh operating system burst into the world of personal computing. This was the point after which the GUI took on its final form, with windows, icons which could be manipulated, menus, pointing devices and the “drag and drop” function. With the exception of a few details, such as shrinking the electronics and inventing the touch-screen, all the elements of the modern handheld smart device were present. The computer user interface of thirty years ago would be familiar to today’s smartphone user, quite unlike that of 50 years ago.

Featured image: The Columbia University computer centre in 1965. Image courtesy columbia.edu